Azure Data Factory is a cloud-based, code-free ETL and data integration service from Microsoft, delivered as Platform-as-a-Service (PaaS). It focuses on seamlessly integrating data from multiple sources into a centralized data store in the cloud. This enables efficient management and analysis of data, regardless of its origin. Because the service is code-free, data pipelines are much easier to develop and maintain.

A central aspect of Azure Data Factory is bringing structured and unstructured data together in a central repository, which makes it easier to consolidate and standardize. Through integration with various Azure compute services, the service enables flexible and scalable data processing: these services carry out the transformations and analyses, so performance can scale with the workload.

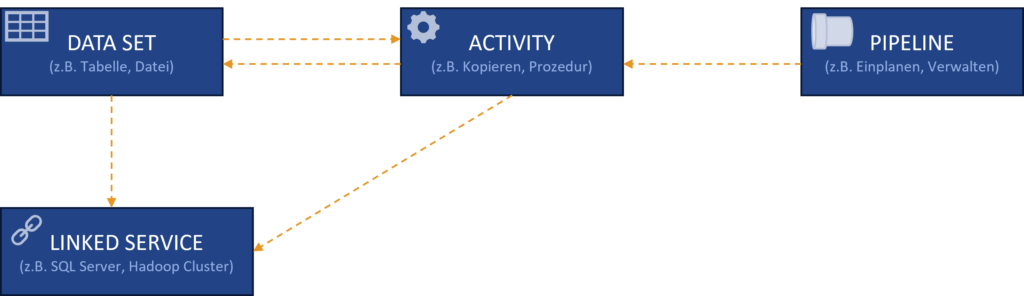

Azure Data Factory core components

An Azure Data Factory can contain multiple pipelines, which are logical groupings of activities. A pipeline combines activities that together perform a task, for example copying data from a SQL Server database to Azure Blob Storage and then processing it with a Hive script on an Azure HDInsight cluster. Datasets are named views of data that reference the input and output data used by activities. Before a dataset can be created, a linked service must exist that defines the connection information for the external resource. For example, an Azure Storage linked service connects to a storage account, while an Azure Blob dataset represents the blob container and folder within that account that hold the input blobs for processing. Linked services are therefore the key to connecting the data factory to external data stores.
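To make these relationships concrete, here is a minimal Python sketch that models the JSON definitions Azure Data Factory uses for linked services, datasets and a pipeline with a Copy activity, and then checks that every reference resolves along the chain pipeline → activity → dataset → linked service. All resource names are hypothetical, and the definitions are trimmed to the reference structure (no connection strings, no source/sink settings); consult the ADF JSON reference for the complete schemas.

```python
# Hypothetical linked services: connection information for external resources.
linked_services = {
    "SqlServerLS": {"properties": {"type": "SqlServer"}},
    "AzureStorageLS": {"properties": {"type": "AzureBlobStorage"}},
}

# Hypothetical datasets: named views of data, each pointing at a linked service.
datasets = {
    "SalesTable": {
        "properties": {
            "type": "SqlServerTable",
            "linkedServiceName": {"referenceName": "SqlServerLS",
                                  "type": "LinkedServiceReference"},
        }
    },
    "InputBlobs": {
        "properties": {
            "type": "AzureBlob",
            "linkedServiceName": {"referenceName": "AzureStorageLS",
                                  "type": "LinkedServiceReference"},
        }
    },
}

# A pipeline groups activities; this Copy activity reads one dataset and writes another.
pipeline = {
    "name": "CopySalesToBlob",
    "properties": {
        "activities": [
            {
                "name": "CopySales",
                "type": "Copy",
                "inputs": [{"referenceName": "SalesTable", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "InputBlobs", "type": "DatasetReference"}],
            }
        ]
    },
}


def unresolved_references(pipeline, datasets, linked_services):
    """Return references to datasets or linked services that do not exist."""
    missing = []
    for activity in pipeline["properties"]["activities"]:
        for ref in activity.get("inputs", []) + activity.get("outputs", []):
            name = ref["referenceName"]
            if name not in datasets:
                missing.append(("dataset", name))
                continue
            ls = datasets[name]["properties"]["linkedServiceName"]["referenceName"]
            if ls not in linked_services:
                missing.append(("linkedService", ls))
    return missing


print(unresolved_references(pipeline, datasets, linked_services))  # []
```

The check mirrors the dependency order described above: a dataset is only usable if its linked service exists, and an activity only if its datasets exist.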

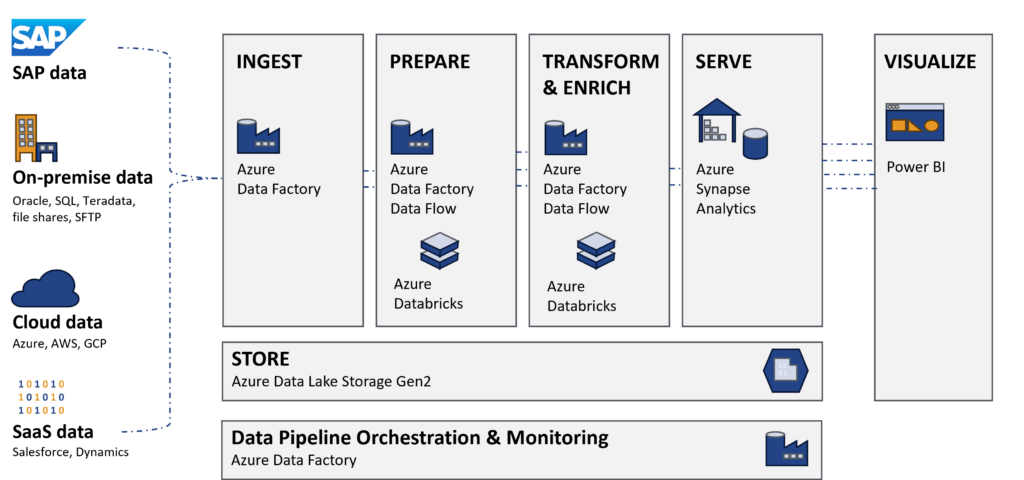

SAP data in Azure Data Factory

In scenarios that integrate data from SAP and non-SAP systems, a modern data warehouse built as a lakehouse offers an efficient solution.

Optionally pushing data back into SAP BW enables further linking and reporting on the SAP side.

This allows Azure Data Factory and SAP Business Warehouse to coexist, for example.

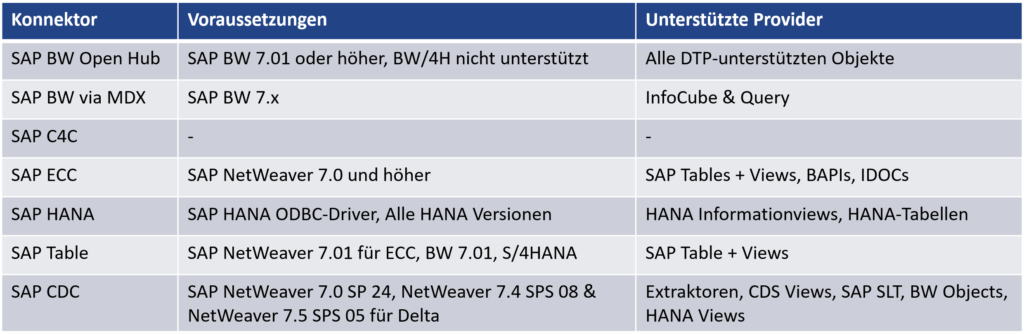

Overview of SAP connectors in Microsoft Azure

Various connectors are available for connecting SAP systems to Azure Data Factory. The following table provides an overview of these.

Many of the connectors have limitations, for example in functionality, in the number of supported objects, or in performance. Only the SAP CDC connector supports a full-fledged delta load.

This makes the SAP CDC connector particularly interesting: it uses SAP's Operational Data Provisioning (ODP) framework and therefore enables entirely new use cases.

The ODP framework and the integration of the SAP CDC connector into the architecture are presented below.

Operational Data Provisioning framework

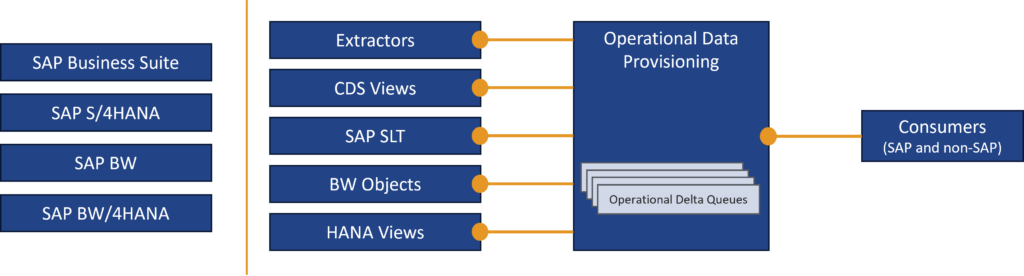

The purpose of the ODP framework is to identify new and changed data records in the source. This is particularly important for very large source tables, where regular full extraction is not feasible. The ODP framework makes the new and changed records available to target systems through Operational Delta Queues (ODQ). Targets can be SAP or non-SAP systems.

On the source side, any ODP-capable source can be used, including SAP S/4HANA and SAP BW. The following diagram illustrates the structure.

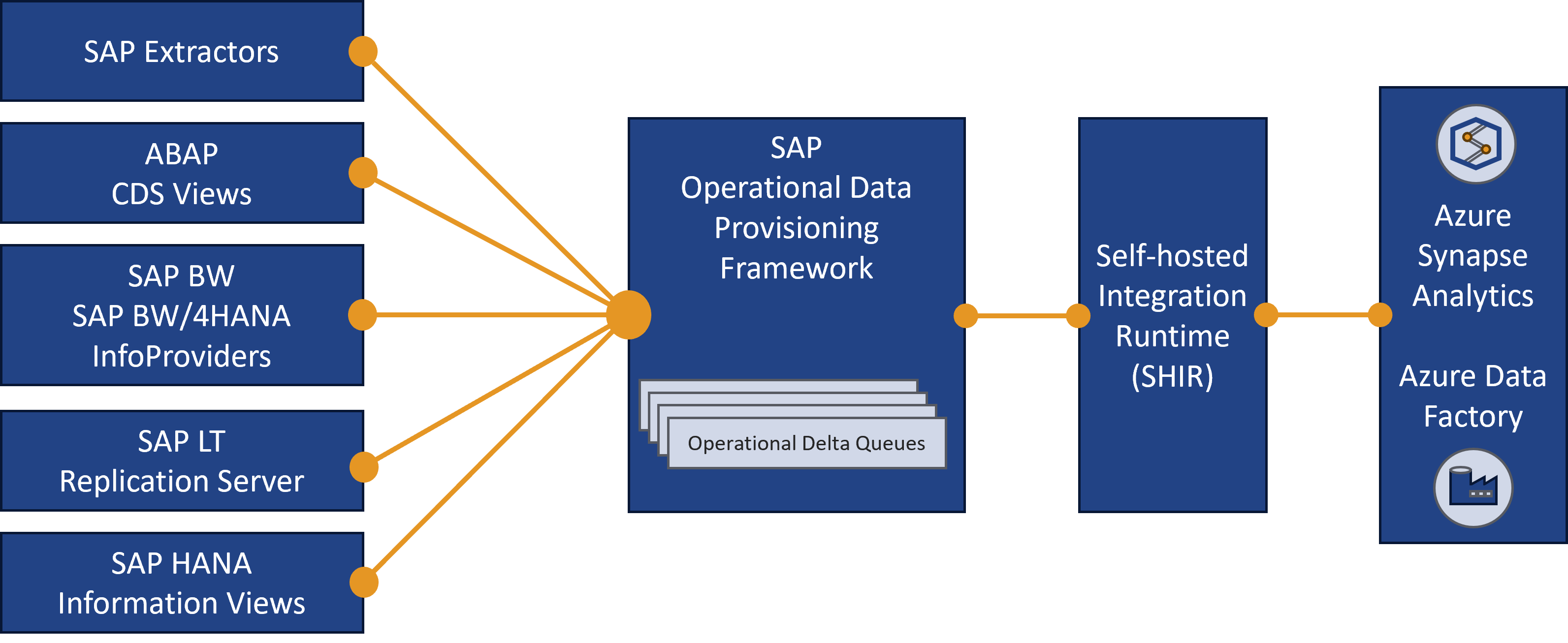

The integration into the Microsoft architecture is shown in the following illustration:

SAP Change Data Capture (CDC) in Azure Data Factory uses the ODP framework for incremental data integration between SAP and Azure. The self-hosted Integration Runtime acts as the link between the two: it provides a secure connection and handles the data exchange. SAP DataSources act as providers, while Azure Data Factory data flows act as subscribers to the Operational Delta Queues. This structure allows changed data to be collected and processed efficiently.
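The provider/subscriber principle behind the delta queues can be illustrated with a deliberately simplified Python model. This is not SAP's implementation: real ODQ handling (requests, units, recovery, retention periods) is far richer. The sketch only shows the core idea that each subscriber first receives an initial full load and afterwards only the changes recorded since its own last fetch, independently of other subscribers. The class and method names are hypothetical, and the C/U/D codes are modeled loosely on ODP's change-mode flag.

```python
from dataclasses import dataclass, field


@dataclass
class DeltaQueue:
    """Simplified model of a delta queue for one provider (e.g. a CDS view)."""
    snapshot: dict = field(default_factory=dict)   # current source state: key -> row
    changes: list = field(default_factory=list)    # ordered change log: (mode, key, row)
    positions: dict = field(default_factory=dict)  # subscriber -> index of next unread change

    def record(self, mode, key, row=None):
        """Provider side: register a create ('C'), update ('U') or delete ('D')."""
        if mode == "D":
            self.snapshot.pop(key, None)
        else:
            self.snapshot[key] = row
        self.changes.append((mode, key, row))

    def fetch(self, subscriber):
        """Subscriber side: full initial load on the first call, then only new changes."""
        if subscriber not in self.positions:
            self.positions[subscriber] = len(self.changes)
            return [("C", k, r) for k, r in self.snapshot.items()]
        start = self.positions[subscriber]
        self.positions[subscriber] = len(self.changes)
        return self.changes[start:]


q = DeltaQueue()
q.record("C", "4711", {"amount": 100})
print(q.fetch("adf_dataflow"))  # initial load: [('C', '4711', {'amount': 100})]
q.record("U", "4711", {"amount": 120})
q.record("C", "4712", {"amount": 50})
print(q.fetch("adf_dataflow"))  # delta: only the update and the new record
print(q.fetch("adf_dataflow"))  # []: nothing new since the last fetch
print(q.fetch("other_target"))  # a second subscriber gets its own initial load
```

Keeping one read position per subscriber is what lets several targets consume the same provider without interfering with each other.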

SAP does not support the use of its ODP framework by third-party products (see SAP Note 3255746). The SAP CDC connector, however, is officially provided by Microsoft and is developed and maintained by Microsoft, so support is available from Microsoft in the event of errors.

Example scenario

This section presents a concrete scenario for using the SAP CDC connector in Azure Data Factory. Sales documents from S/4HANA and CRM data from HubSpot, for which Microsoft also provides a connector, serve as the data basis.

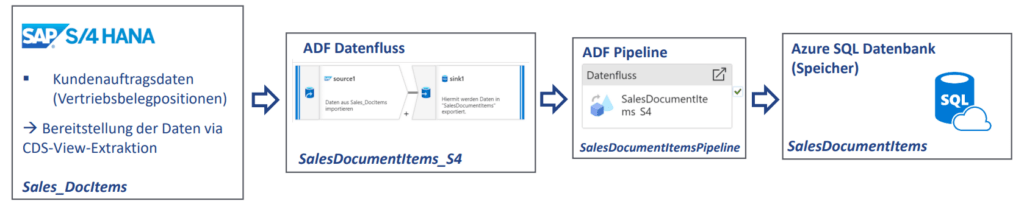

S/4HANA data processing

A CDS view serves as the basis, combining the required information from the various tables on the source side. This eliminates the need to join the data in Azure Data Factory. Joining there is possible, but it takes significantly more effort than preparing the data in S/4HANA.

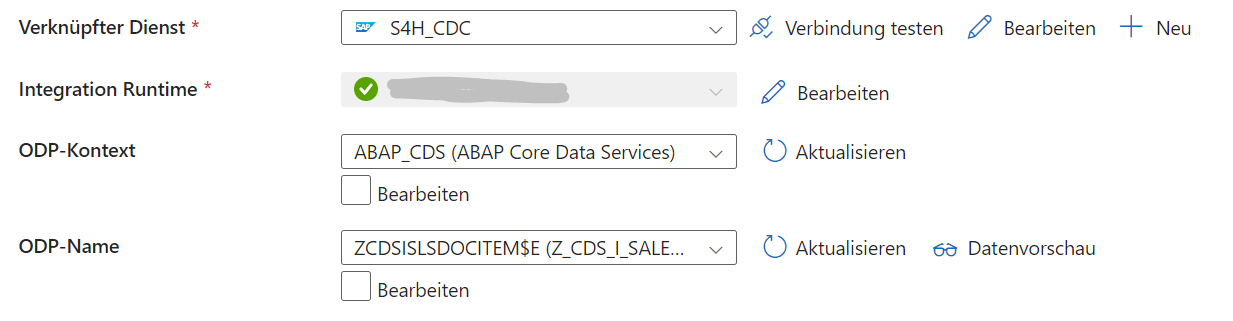

A data flow is then created in Azure Data Factory, consisting of a source and a sink dataset. The source dataset specifies the ODP context and the ODP object to be extracted.
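For orientation, the Python sketch below builds what such a source dataset could look like, as a dict mirroring the ADF JSON. As we understand Microsoft's SAP CDC connector documentation, the dataset type is `SapOdpResource` with the properties `context` and `objectName`; please verify against the current documentation before relying on it. The dataset name, the linked service name `SapS4HanaLS` and the object name `ZSD_SALES_CDC` are hypothetical.

```python
# Sketch of an SAP CDC source dataset, mirroring the ADF JSON structure.
# Type/property names as we understand the connector docs (verify before use);
# all concrete names below are hypothetical.

# Common ODP contexts, for orientation (not exhaustive):
#   SAPI     - classic DataSources/extractors
#   ABAP_CDS - extraction-enabled ABAP CDS views
#   BW       - SAP BW objects


def sap_cdc_source_dataset(name, linked_service, context, object_name):
    """Return a dataset definition pointing at one ODP object in a given ODP context."""
    return {
        "name": name,
        "properties": {
            "type": "SapOdpResource",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {"context": context, "objectName": object_name},
        },
    }


# Hypothetical CDS view for sales documents, exposed via the ABAP_CDS context.
ds = sap_cdc_source_dataset("S4SalesDocuments", "SapS4HanaLS", "ABAP_CDS", "ZSD_SALES_CDC")
print(ds["properties"]["typeProperties"])
# {'context': 'ABAP_CDS', 'objectName': 'ZSD_SALES_CDC'}
```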

The data flow then defines how data moves from the source dataset (S/4HANA ODP connection) to the sink dataset (SQL table). Delta extraction is also configured here, in the data flow settings.
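What delta execution means for the sink can be sketched in Python, with the standard-library `sqlite3` module standing in for the SQL server: each delta record carries a change-mode flag and is applied to the target table as an upsert or a delete by key. In the actual data flow this is configured in the sink (update method and key columns) rather than coded by hand. The table `sales_documents`, its columns, and the `(mode, key, amount)` record layout are hypothetical, and the C/U/D codes are modeled loosely on ODP's change-mode flag.

```python
import sqlite3


def apply_delta(conn, records):
    """Apply (mode, doc_id, amount) change records to the target table in order."""
    for mode, doc_id, amount in records:
        if mode in ("C", "U"):
            # Upsert: insert a new row or overwrite the existing row with the same key.
            conn.execute(
                "INSERT OR REPLACE INTO sales_documents (doc_id, amount) VALUES (?, ?)",
                (doc_id, amount),
            )
        elif mode == "D":
            conn.execute("DELETE FROM sales_documents WHERE doc_id = ?", (doc_id,))
        else:
            raise ValueError(f"unknown change mode: {mode!r}")
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales_documents (doc_id TEXT PRIMARY KEY, amount REAL)")

apply_delta(conn, [("C", "4711", 100.0), ("C", "4712", 50.0)])  # initial load
apply_delta(conn, [("U", "4711", 120.0), ("D", "4712", None)])  # later delta run
print(conn.execute("SELECT doc_id, amount FROM sales_documents").fetchall())
# [('4711', 120.0)]
```

Applying records strictly in order matters: an update followed by a delete for the same key must end with the row removed.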

The data flow is then added to an Azure Data Factory pipeline. This pipeline can be run manually in debug mode or triggered on a schedule for production use. Each run populates the table defined in the sink dataset on the SQL server.
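For scheduled operation, ADF uses schedule triggers; a run can also be started through the Azure Resource Manager REST API ("Pipelines - Create Run", api-version 2018-06-01, sent as a POST request). The Python sketch below only builds a schedule trigger definition and the request URL; it does not call Azure and omits authentication. Subscription, resource group, factory and pipeline names are hypothetical placeholders.

```python
from urllib.parse import quote


def schedule_trigger(pipeline_name, start_time, frequency="Hour", interval=1):
    """Schedule trigger definition that runs one pipeline every `interval` units of `frequency`."""
    return {
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": frequency,
                    "interval": interval,
                    "startTime": start_time,
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {"type": "PipelineReference",
                                          "referenceName": pipeline_name},
                    "parameters": {},
                }
            ],
        }
    }


def create_run_url(subscription_id, resource_group, factory, pipeline):
    """URL for an on-demand pipeline run (to be sent as POST with a bearer token)."""
    return (
        "https://management.azure.com/subscriptions/"
        f"{quote(subscription_id)}/resourceGroups/{quote(resource_group)}"
        f"/providers/Microsoft.DataFactory/factories/{quote(factory)}"
        f"/pipelines/{quote(pipeline)}/createRun?api-version=2018-06-01"
    )


trigger = schedule_trigger("PL_S4_Sales", "2024-01-01T06:00:00Z")
print(create_run_url("<subscription-id>", "rg-data", "adf-demo", "PL_S4_Sales"))
```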

HubSpot data processing

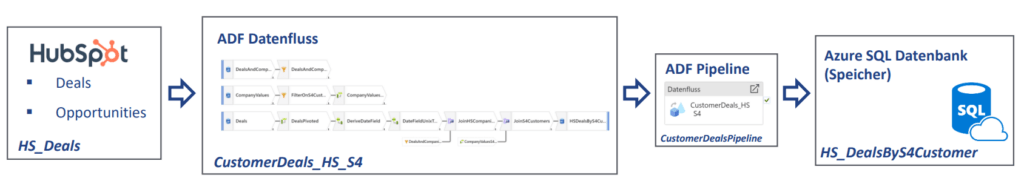

On the HubSpot side, deals and opportunities are loaded into Azure Data Factory. The HubSpot connector is based on the HubSpot REST API but comes preconfigured. Note that the data is stored in a star schema, so mapping tables must be used to obtain the customer numbers for the deals. The tables are then pivoted and joined in the data flow.
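The pivot-and-join step can be illustrated in plain Python. The sketch assumes the HubSpot data arrives as property rows (one row per deal and property), a mapping table linking deals to companies, and a company table holding the customer number. All table, column and property names are hypothetical and do not reflect the connector's actual output schema.

```python
from collections import defaultdict

# Hypothetical extracts as they might arrive from the HubSpot source.
deal_properties = [  # (deal_id, property_name, value)
    ("d1", "dealname", "Rollout EMEA"),
    ("d1", "amount", "25000"),
    ("d2", "dealname", "Pilot US"),
    ("d2", "amount", "8000"),
]
deal_to_company = [("d1", "c10"), ("d2", "c20")]       # mapping table
companies = {"c10": "CUST-1001", "c20": "CUST-2002"}  # company_id -> customer number


def pivot(rows):
    """Turn (id, property, value) rows into one dict per id, with properties as columns."""
    wide = defaultdict(dict)
    for entity_id, prop, value in rows:
        wide[entity_id][prop] = value
    return dict(wide)


def deals_with_customer_numbers(props, mapping, customers):
    """Pivot the deal properties, then join via the mapping table to get customer numbers."""
    deals = pivot(props)
    result = []
    for deal_id, company_id in mapping:
        if deal_id in deals and company_id in customers:  # inner-join semantics
            row = {"deal_id": deal_id, "customer_number": customers[company_id]}
            row.update(deals[deal_id])
            result.append(row)
    return result


for row in deals_with_customer_numbers(deal_properties, deal_to_company, companies):
    print(row)
# {'deal_id': 'd1', 'customer_number': 'CUST-1001', 'dealname': 'Rollout EMEA', 'amount': '25000'}
# {'deal_id': 'd2', 'customer_number': 'CUST-2002', 'dealname': 'Pilot US', 'amount': '8000'}
```

Deals without a mapping entry are dropped here (inner join); a left join would keep them with an empty customer number instead.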

The pipeline works the same way as in the S/4HANA scenario. After execution, the data is stored in the defined table on the SQL server.

Further use

Now that both sets of data are available, they can be used for reporting.

This can be done directly in Microsoft Power BI, for example, or via Azure Analysis Services as an intermediate layer. Azure Analysis Services is an in-memory analytical engine from Microsoft that integrates natively with Microsoft Power BI.

As the data is stored in an SQL database, other front ends can also be used.
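Because both sources end up in SQL tables, any front end can combine them with plain SQL. The sketch uses `sqlite3` in place of the SQL server. The table and column names (`s4_sales`, `hubspot_deals`, `customer_number`) are hypothetical and assume the customer number is available on both sides, as described above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE s4_sales (doc_id TEXT PRIMARY KEY, customer_number TEXT, net_value REAL);
    CREATE TABLE hubspot_deals (deal_id TEXT PRIMARY KEY, customer_number TEXT, amount REAL);
    INSERT INTO s4_sales VALUES ('4711', 'CUST-1001', 120.0), ('4712', 'CUST-1001', 30.0);
    INSERT INTO hubspot_deals VALUES ('d1', 'CUST-1001', 250.0), ('d2', 'CUST-2002', 80.0);
    """
)

# Booked sales (S/4HANA) next to deal volume (HubSpot) per customer.
# Each side is aggregated first so the join does not multiply rows.
query = """
    SELECT c.customer_number,
           COALESCE(s.revenue, 0.0)     AS revenue,
           COALESCE(d.deal_volume, 0.0) AS deal_volume
    FROM (SELECT customer_number FROM s4_sales
          UNION SELECT customer_number FROM hubspot_deals) AS c
    LEFT JOIN (SELECT customer_number, SUM(net_value) AS revenue
               FROM s4_sales GROUP BY customer_number) AS s
           ON s.customer_number = c.customer_number
    LEFT JOIN (SELECT customer_number, SUM(amount) AS deal_volume
               FROM hubspot_deals GROUP BY customer_number) AS d
           ON d.customer_number = c.customer_number
    ORDER BY c.customer_number
"""
for row in conn.execute(query):
    print(row)
# ('CUST-1001', 150.0, 250.0)
# ('CUST-2002', 0.0, 80.0)
```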

Conclusion

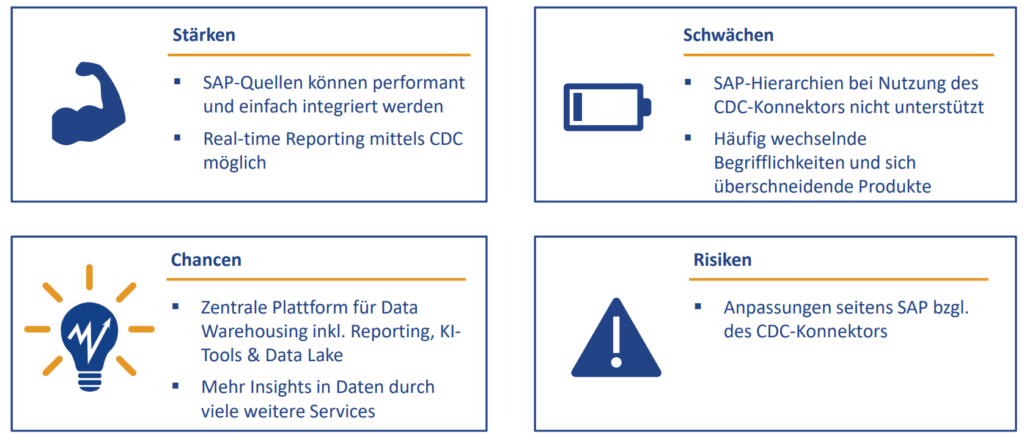

The example shows that data models combining SAP and non-SAP data can be set up quickly and without code using the SAP CDC connector and Azure Data Factory. The accompanying illustration provides further context for classifying this approach.

Outlook

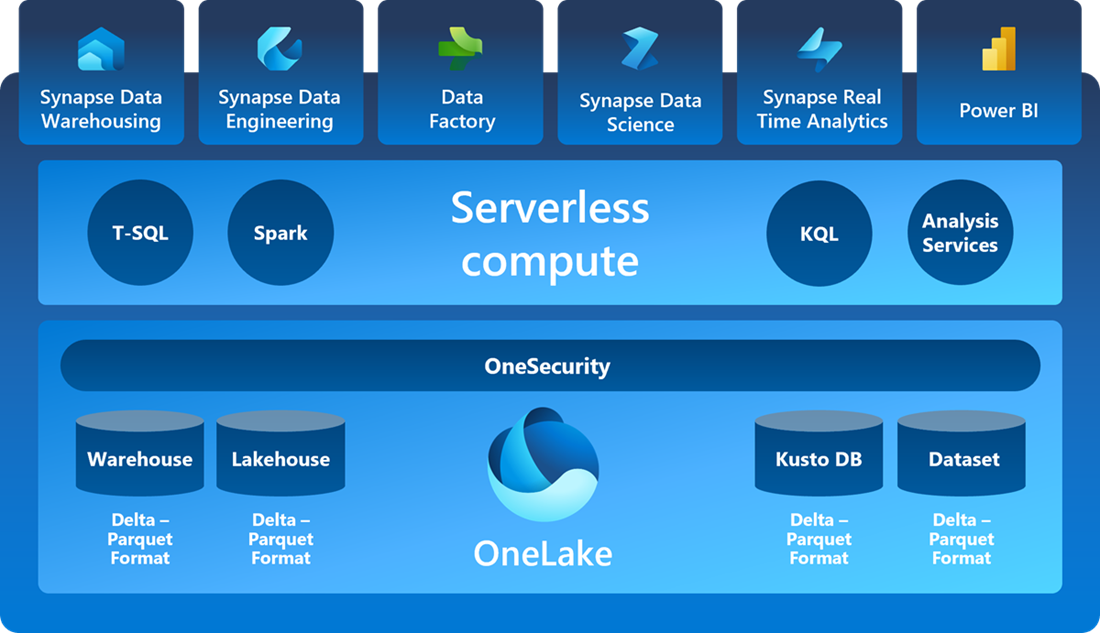

Microsoft has introduced its own data fabric, “Microsoft Fabric”. It is a comprehensive all-in-one SaaS solution for modern data warehousing (DWH). Currently in preview, it offers specialized product components, although some of them, such as Data Factory, differ from their standalone versions. Even at this stage of development, Fabric promises an efficient solution for comprehensive data management and modern data warehouse requirements.

The SAP CDC connector presented here is not yet available in Data Factory within Microsoft Fabric.

https://learn.microsoft.com/de-de/fabric/get-started/microsoft-fabric-overview

Within the SAP world, SAP also offers the option of extracting data via ODP in CDC mode with its cloud data warehousing solution “SAP Datasphere”. So-called replication flows are used for this purpose; they control and carry out the data transport.
